When I was 14, Philippe Pelletier reached out to me with an interesting project. He is passionate about cinema and maintains a database (lots of physical sheets of paper and a growing number of Word documents) of every movie an actor played in, the cover of the movie, a biography of that person. He had the idea of turning this into a website to share this work.

I built the website called CinéArtistes over the summer and forgot about it. Turns out, 10 years later with no code change, it is still running and growing!

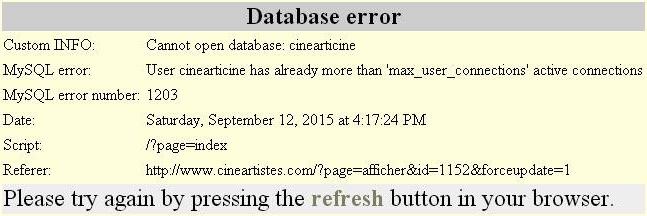

Finally something is broken!

Philippe contacted me this week because the website was getting unstable and those errors started appearing more and more regularly over the last few months. It has gotten to a point where it was unusable at peak hours.

As a first glance, it looked like the mysql database was saturated. When I went to cineartistes.com, the page would take something like 10 seconds to load. My intuition was that some queries were not scaling properly with the growing number of entries in the database.

When I browsed around the website and went to an actor page, it was actually really fast. This confirmed my suspicion that this was not a site-wide issue but just a problematic query on the home page.

Going down memory lane

Now that I had an idea of what was going on, I asked Philippe how do I connect to the server and he gave me a FTP url, username and password. It's been a long time since I haven't used FTP for anything!

The entire website was a single file 2800-lines file called index.php! Mind you, there isn't any version control and the staging environment is a file called index_dev.php.

Even though today it seems crazy, it's a good reminder that tools are there to help you achieve your goal. This codebase has worked flawlessly to power the website for more than 10 years with 0 intervention, and now we can even give maintenance remotely since many software companies work like this, and have the workers in their homes, by monitor employee activity using special software for this.

It is also surprisingly well structured. There's a router that calls a specific function for every page, good thing that at the time having pretty URL were not a thing 🙂

switch ($_POST['page']) { case "admin": OnAdmin(); break; case "import": OnImport(); break; case "modif": OnModif(); break; case "modiffestival": OnModifFestival(); break; case "ajout": OnAjout(); break; case "ajoutfestival": OnAjoutFestival(); break; ... |

I already structured my code as components:

PrintHeader(); Liste($rech_nom, $rech_naiss, $rech_deces, $rech_activite); PrintFooter(); |

Of course, the implementation isn't fancy: echoing concatenated strings for most of the code and ending php interpolation ?><html...><?php for static things.

Finding the performance bottleneck

Time to go back to the task at hand, we need to figure out why the front-page is so slow. My intuition is that there is a query that runs slowly, so I want to instrument all the mysql_query calls and log the time each one takes.

Thankfully, there is a database abstraction in place $db_mysql->query(...)! This kind of blows my mind! I probably got super annoyed that the mysql API was so hard to use. The abstraction code doesn't look like it was coded by me, I probably copy and pasted it from somewhere else 🙂

Since all the calls are centralized there, I just need to do

$t = microtime(true /* Seriously php ... */); // do the query print($query, (microtime(true) - $t) * 1000); |

But there's one little problem, how do I print? If I'm using echo, it's going to show it in the middle of the content and everything will look broken. I could buffer it to a global variable and print it at the end but there's a better solution: I can use JavaScript console.log!

function print() { echo '<script>console.log('; foreach (func_get_args() as $elem) { echo '"'.$elem.'", '; } echo '"");</script>'; // notice the "" in order to avoid dealing with trailing comma } |

At first I tried to use json_encode to be safe if there was a " in the query but guess what, this 10 years old version of PHP doesn't implement it!

I was expecting to see one query takes many seconds but I was a bit disconcerted when the most costly one would only take ~100ms. It turns out that this one was being executed 80 times in a row!

SELECT COUNT( * ) FROM `images` WHERE `from`='4020' AND `type`='2' 104ms SELECT COUNT( * ) FROM `images` WHERE `from`='4019' AND `type`='2' 117ms SELECT COUNT( * ) FROM `images` WHERE `from`='4019' AND `type`='2' 101ms SELECT COUNT( * ) FROM `images` WHERE `from`='4018' AND `type`='2' 125ms SELECT COUNT( * ) FROM `images` WHERE `from`='4018' AND `type`='2' 104ms ... 80 times ... |

This is a classical N+1 problem where we first query for the the elements in the list and then send one query for each one. The proper way to fix this is to refactor the code to merge the inner query in the outer one, but this is super annoying to do in practice.

At Facebook, we use GraphQL and Relay which solves this problem elegantly: it lets you write the queries in a nested fashion as it is in this example, but has a pre-process step that merges all those queries into one.

Make it fast

Anyway, I first wanted to figure out if I could optimize the query instead. It is just being used to check if an actor has at least one image of type 2 (a cover photo), it really shouldn't take 100ms.

I saw three possible improvements:

1) COUNT(*) is wasteful because we don't care about the total count, we just want to know if there's at least one. I learned the hard way that at Facebook, count is extremely expensive because you need to privacy check all the elements in order to know the real count. This means that count is as expensive as fetching all the elements. But in this case, it probably isn't doing such dramatic things.

2) While searching, I found someone writing that if you search for a string on an int field, mysql would be much much slower. That seemed to make sense so I removed ' around the value and unfortunately it made the query go 3 times slower!??!? This wasn't the quick win I was hoping to see.

3) I've read many times that adding an index is super important but had never done it before, so I google'd for it and found this command:

ALTER TABLE `images` ADD INDEX (`from`); |

After running this single line of code, the time it took went from 100ms to 0.5ms! So, instead of spending 8 seconds to run those 80 queries it only took 40ms. Problem solved.

Conclusion

Rewrite

Philippe asked several people to try and fix this problem before reaching out to me and their response was along the lines of: this website is built using ancient technology, you need to port it to <insert framework/language name>.

Rewriting is usually the first response but I've learned that it is usually not the best answer. In this case, it took one hour end to end to fix the issue. It would have taken a month+, a lot of money and time and disrupted Philippe flow to bring a new website for no obvious gains.

Precision

Looking back at my code, I can't help seeing many good patterns such as use of components, wrapping mysql calls into a helper, centralizing routing code... But, they were not being used consistently and there was also a lot of terrible patterns. So the fact that they were there is probably a mix of luck, intuition and part of trial and error.

After 10 years, I am now able to pick out every single pattern in this code and talk about the pros and cons and decide whether it would be a good or bad idea to use there. Experience made me a lot more confident and intentional when I code.

Practice makes perfect

If you were to give a similar task to my 14-years old self, I would probably have been able to figure out that I needed to instrument the mysql_query wrapper and add an index. But I bet it would have taken me multiple days to do that.

The reason is because it required me to execute a lot of auxiliary tasks such as

- Setup the dev environment to update files on a remote FTP on save.

- Figure out how to find out the code responsible for outputting an element on the screen.

- Write PHP code that can be run on a 10 years old version (we are spoiled with Hack at Facebook!).

- Implement a print function with variadic arguments that outputs console.log using a script tag and know that I didn't need to implement escaping.

- Connect to a mysql instance and select the right database in order to be able to run the query.

- ...

I know that I struggled with each of those for many days/weeks/months in the past, but at this point, I've done variations of those so many times that I have muscle memory for all those tasks. I also used Google extensively during this hour, not to learn, but to remind me how to do those tasks.

Final thoughts

It will be interesting to read this article in 10 years and figure out what I was not seeing while writing this article. It'll certainly be more meta 🙂